When someone sees something that isn’t there, people often refer to the experience as a hallucination.

Hallucinations occur when your sensory perception does not correspond to external stimuli.

Technologies that rely on artificial intelligence can have hallucinations, too.

When an algorithmic system generates information that seems plausible but is actually inaccurate or misleading, computer scientists call it an AI hallucination. Researchers have found these behaviors in different types of AI systems, from chatbots such as ChatGPT to image generators such as Dall-E to autonomous vehicles. We are information science researchers who have studied hallucinations in AI speech recognition systems.

Wherever AI systems are used in daily life, their hallucinations can pose risks. Some may be minor – when a chatbot gives the wrong answer to a simple question, the user may end up ill-informed. But in other cases, the stakes are much higher. From courtrooms where AI software is used to make sentencing decisions to health insurance companies that use algorithms to determine a patient’s eligibility for coverage, AI hallucinations can have life-altering consequences. They can even be life-threatening: Autonomous vehicles use AI to detect obstacles, other vehicles and pedestrians.

Making it up

Hallucinations and their effects depend on the type of AI system. With large language models – the underlying technology of AI chatbots – hallucinations are pieces of information that sound convincing but are incorrect, made up or irrelevant. An AI chatbot might create a reference to a scientific article that doesn’t exist or provide a historical fact that is simply wrong, yet make it sound believable.

In a 2023 court case, for example, a New York attorney submitted a legal brief that he had written with the help of ChatGPT. A discerning judge later noticed that the brief cited a case that ChatGPT had made up. Undetected, a fabricated citation like this could change the outcome of a case.

With AI tools that can recognize objects in images, hallucinations occur when the AI generates captions that are not faithful to the provided image. Imagine asking a system to list objects in an image that shows only a woman from the chest up talking on a phone, and receiving a response that describes “a woman talking on a phone while sitting on a bench.” Invented details like the bench could have serious consequences in contexts where accuracy is critical.

What causes hallucinations

Engineers build AI systems by gathering massive amounts of data and feeding it into a computational system that detects patterns in the data. The system develops methods for responding to questions or performing tasks based on those patterns.

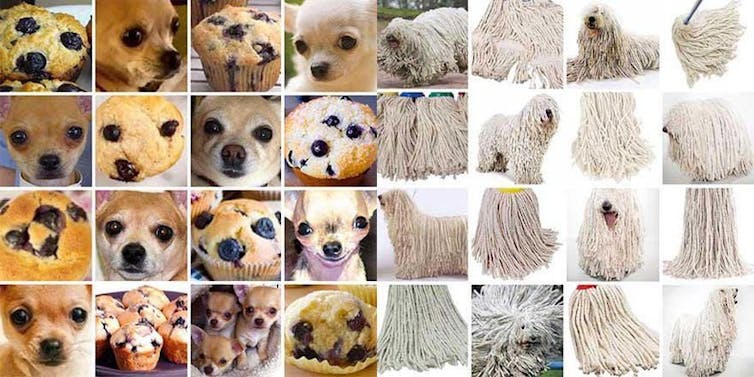

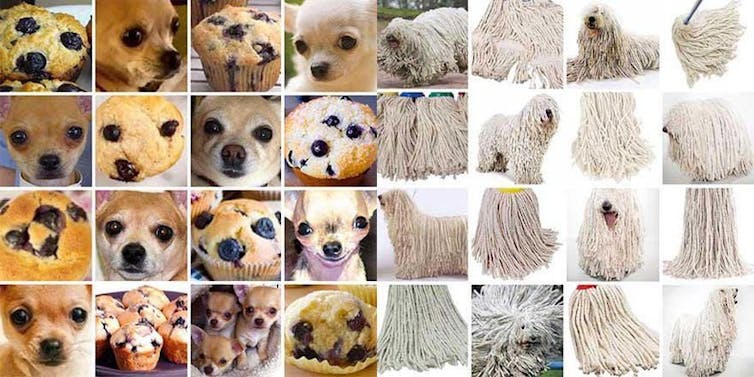

Supply an AI system with 1,000 photos of different breeds of dogs, labeled accordingly, and the system will soon learn to detect the difference between a poodle and a golden retriever. But feed it a photo of a blueberry muffin and, as machine learning researchers have shown, it may tell you that the muffin is a chihuahua.

Image credit: Shenkman et al., CC BY

When a system doesn’t understand the question or the information that it is presented with, it may hallucinate. Hallucinations often occur when the model fills in gaps based on similar contexts from its training data, or when it is built using biased or incomplete training data. This leads to incorrect guesses, as in the case of the mislabeled blueberry muffin.
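One way to picture this kind of gap-filling is a classifier that can only answer with labels it has already seen. The sketch below is a toy illustration, not how any production image recognizer works: the feature vectors, the `classify` helper and the scaling factor are all invented for this example. It shows how a system with no "none of the above" option can assign a familiar label, with high apparent confidence, to something it was never trained on.

```python
import math

# Toy, invented feature vectors: (ear_pointiness, coat_curliness, body_size).
# These numbers are illustrative assumptions, not real measurements.
TRAINING_DATA = {
    "poodle": [(0.2, 0.9, 0.6), (0.3, 0.8, 0.5)],
    "golden retriever": [(0.3, 0.3, 0.9), (0.2, 0.4, 0.8)],
    "chihuahua": [(0.9, 0.1, 0.1), (0.8, 0.2, 0.2)],
}


def centroid(points):
    """Average each feature across the labeled examples."""
    return tuple(sum(values) / len(values) for values in zip(*points))


CENTROIDS = {label: centroid(points) for label, points in TRAINING_DATA.items()}


def classify(features):
    """Return the closest known label and a softmax-style confidence score.

    The classifier must pick one of the known labels; it has no way to say
    "this is not a dog at all".
    """
    distances = {label: math.dist(features, c) for label, c in CENTROIDS.items()}
    # The factor 10 is an arbitrary sharpness setting chosen for this sketch.
    weights = {label: math.exp(-10 * d) for label, d in distances.items()}
    best = min(distances, key=distances.get)
    return best, weights[best] / sum(weights.values())


# A hypothetical "blueberry muffin" whose invented features happen to sit
# near the chihuahua examples.
muffin = (0.7, 0.1, 0.1)
label, confidence = classify(muffin)
print(label, round(confidence, 2))
```

Because the confidence score only compares the known labels with one another, it says nothing about whether the input belongs to any of them.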

It’s important to distinguish between AI hallucinations and intentionally creative AI outputs. When an AI system is asked to be creative – like when writing a story or generating artistic images – its novel outputs are expected and desired. Hallucinations, on the other hand, occur when an AI system is asked to provide factual information or perform specific tasks but instead generates incorrect or misleading content while presenting it as accurate.

The key difference lies in the context and purpose: Creativity is appropriate for artistic tasks, while hallucinations are problematic when accuracy and reliability are required.

To address these issues, companies have suggested using high-quality training data and constraining AI responses to follow certain guidelines. Nevertheless, hallucinations may persist in popular AI tools.

What’s at risk

The impact of an output such as calling a blueberry muffin a chihuahua may seem trivial, but consider the different kinds of technologies that use image recognition systems: An autonomous vehicle that fails to identify objects could cause a fatal traffic accident. An autonomous military drone that misidentifies a target could put civilians’ lives in danger.

For AI tools that provide automatic speech recognition, hallucinations are AI transcriptions that include words or phrases that were never actually spoken. This is more likely to occur in noisy environments, where an AI system may end up adding new or irrelevant words in an attempt to decipher background noise such as a passing truck or a crying infant.
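When a trusted human transcript is available, added words like these can be surfaced by aligning the two texts. The sketch below is a minimal illustration using Python's standard `difflib` module; the `inserted_words` helper and both example sentences are hypothetical, and it assumes a reliable reference transcript exists, which is often not the case in practice.

```python
import difflib


def inserted_words(reference, hypothesis):
    """List words in the AI transcript that have no counterpart in the reference."""
    ref_words = reference.lower().split()
    hyp_words = hypothesis.lower().split()
    matcher = difflib.SequenceMatcher(a=ref_words, b=hyp_words, autojunk=False)
    extra = []
    for tag, _i1, _i2, j1, j2 in matcher.get_opcodes():
        # "insert" marks a run of words present only in the AI transcript.
        if tag == "insert":
            extra.extend(hyp_words[j1:j2])
    return extra


# Hypothetical example: what was said versus what the system wrote down.
reference = "please take the medication twice a day"
hypothesis = "please take the medication twice a day and call your brother"
print(inserted_words(reference, hypothesis))
# prints ['and', 'call', 'your', 'brother']
```

This only catches pure insertions; words that the system swaps for other words would need the "replace" runs to be inspected as well.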

As these systems become more regularly integrated into health care, social service and legal settings, hallucinations in automatic speech recognition could lead to inaccurate clinical or legal outcomes that harm patients, criminal defendants or families in need of social support.

Check AI’s work

Regardless of AI companies’ efforts to mitigate hallucinations, users should stay vigilant and question AI outputs, especially when they are used in contexts that require precision and accuracy. Double-checking AI-generated information with trusted sources, consulting experts when necessary, and recognizing the limitations of these tools are essential steps for minimizing their risks.

Anna Choi, Ph.D. Candidate in Information Science, Cornell University and Katelyn Mei, Ph.D. Student in Information Science, University of Washington

This article is republished from The Conversation under a Creative Commons license.